This is a very big year for world politics. There are more than 64 countries (plus the EU) holding elections in 2024, representing 49% of the world’s population. The US elections are drawing ever closer, and all the while the use of AI is more widespread than ever. How will the growing prevalence and use of AI influence this monumental year in politics?

AI has been accused of disrupting politics by “blurring the walls of reality”. Karl Manheim and Lyric Kaplan suggest that AI threatens elections and democratic institutions by:

- Posing a cyberthreat to elections

- Using psychographic profiling to influence voters

- Promoting fake news

- Contributing to the demise of trusted institutions

Cyberthreats to Elections

The 2016 US political cycle was defined by Russian intelligence interventions in the election, including attempts to penetrate election software and equipment in 21 states. Not only were cyberattacks launched against the voting software, but campaign finance databases and the emails of over 100 election officials were also accessed.

Cyberattacks are not a new phenomenon, but AI has exacerbated their prevalence by allowing hackers to target victims more effectively and defeat cyber defenses. It must be acknowledged that AI can also be used to improve cyber defenses. However, as hackers invest in more sophisticated AI-powered software, the US appears to be cutting funding for AI-powered cyber defenses. Ultimately, the playing field isn’t even, and we are seeing AI being used far more to attack than to defend.

Psychographic Profiling

Psychographic profiling involves sorting individuals into groups based on shared psychological characteristics, such as personality traits, attitudes and interests. In politics, psychographic profiling combined with AI software has been used to influence voter behavior by sending targeted messages or posts on social media.

In 2017, claims were made that “artificial intelligence has already ‘silently [taken] over democracy’ through the use of behavioral advertising, social media manipulation, bots and trolls”. A well-known instance of psychographic profiling occurred in the 2010s, when the personal data of millions of Facebook users was collected by the British consulting firm Cambridge Analytica and used for political advertising. Now, claims go as far as to suggest that Facebook could determine the results of an election without anyone ever finding out.

In fact, developments in AI have the potential to take psychographic profiling to the next level. In the 2020 US presidential election, hundreds of BAME individuals received robocalls warning them that if they voted by post, their information could be used by the police to track down individuals with outstanding warrants, by debt collectors and even by the CDC for mandatory vaccines. The perpetrators used postcodes to target specific ethnicities and discouraged many from voting in this way. This created a decisional interference and skewed the demographic of voters.

Joycelyn Tate (a technology policy expert) argues that AI has made this problem all the more serious as individuals with outstanding debts, for example, can be micro-targeted. Furthermore, actors’ voices are no longer needed to carry out these calls and AI-voice generation software can even replicate the voices of trusted members of the community.

Fake News

Fake news is rife across the political landscape. In 2016 we may have worried about the Russian government doctoring photos and releasing them online; now anyone can create a realistic fake photo in seconds. Generative AI tools that can create fake images, text, videos or sound recordings actively promote rumor cascades and other “information disorders”. Alla Polishchuk has described X as a “swamp flooded with disinformation excused by Musk as exemplifying ‘freedom of speech’”.

Polishchuk has further criticized X for its release of an image generation textbox 2 months before the next election. She has claimed that it has the potential to be highly disruptive and to worsen the increasingly invasive presence of fake images across X. The main problem with the pervasiveness of false information is that it paralyses people into indecision, and consequently reduces voting numbers.

The inability to determine what is real and what is fake also creates the “liar’s dividend”, whereby an increase in manipulated content results in general skepticism of all media, making it easier for people such as politicians to dismiss authentic images, audio or video as fake. For example, Trump recently dismissed a genuine image of one of Harris’s campaign events as AI-generated. But if you look at the last month in US politics, who would blame a person for thinking it impossible that, in the same week, Biden accidentally introduced Zelensky as Putin and there was an assassination attempt on Donald Trump? Even one of those events alone is hard to believe. The truth is not always more believable than the lies.

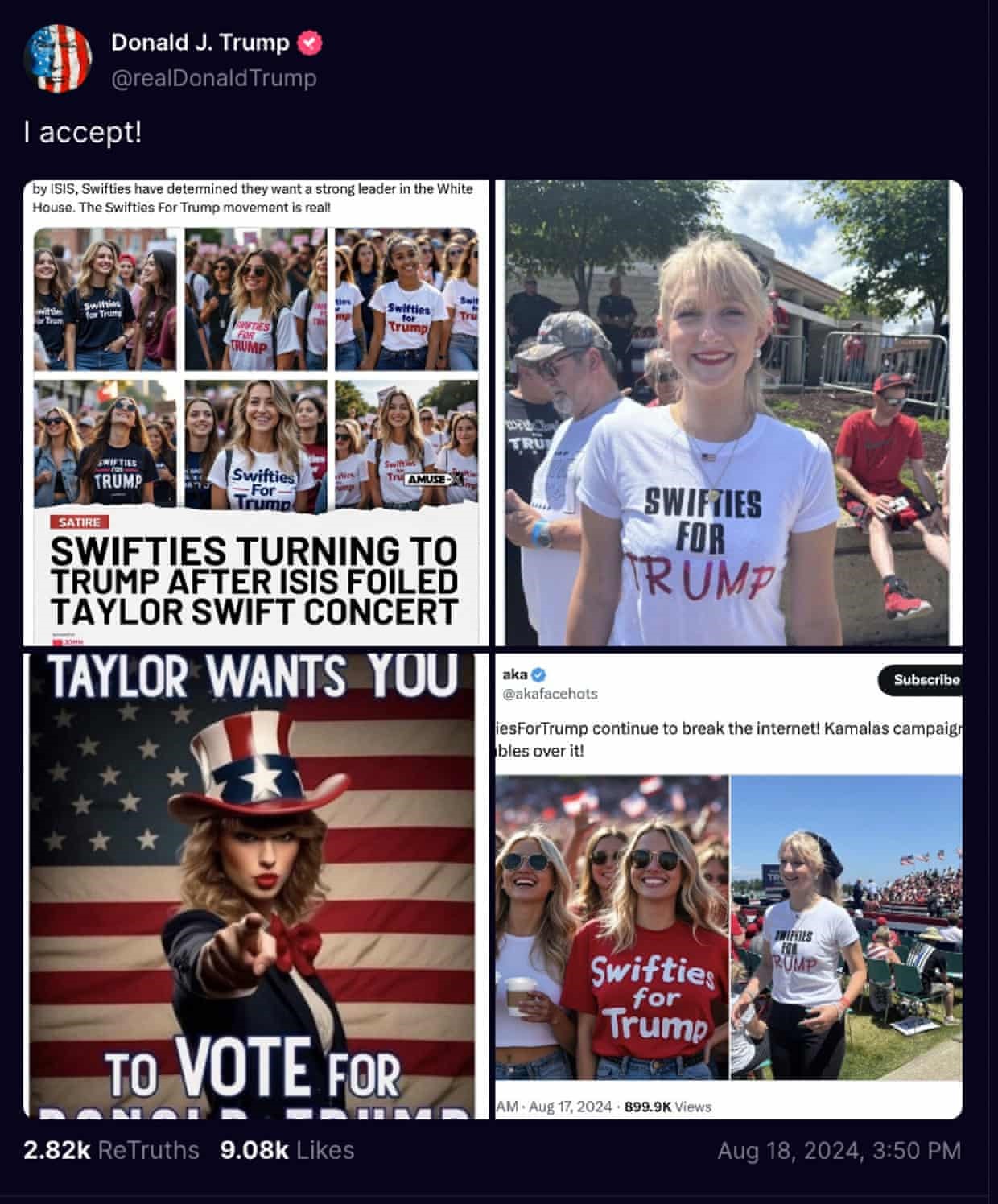

Perhaps even more concerning is the weaponization of fake images and content by politicians. Donald Trump is particularly notorious for using this tactic. Recently, he re-posted fake images of Swifties (Taylor Swift fans) purportedly promoting the Trump campaign. Trump has also shared fake images that depicted Kamala Harris holding a communist rally at the Democratic National Convention, as well as a deepfake video of himself dancing with X owner Elon Musk.

These events aren’t isolated to Trump or the US election campaign. In the run-up to the presidential elections in Argentina, both competing teams employed AI to launch attacks on one another. Even the Russian government uses deepfakes to discredit Putin’s political opponents, though the tactic is less impactful there, given that the government already uses authoritarian means of political repression and controls would-be candidates’ access to the ballot.

Demise of Trusted Institutions

The US was recently demoted from a full democracy to a flawed democracy due to the “erosion of confidence in the government and public institutions”. Of course, the fault cannot be attributed to AI alone, but its contribution to the erosion of transparency, accountability and fairness in politics should not be understated. Even if we put aside instances where AI is being used deliberately as a weapon, Albert Fox Cahn suggests that using it to convey genuine government announcements, or to reach out to voters in regional dialects the speaker can’t actually speak, sets a dangerous precedent.

Cahn argues that when deepfakes are used for genuine government purposes, the public is taught to believe that deepfakes are reliable and should be trusted. “There is huge potential to use deepfakes to really undermine trust in government and trust in our institutions.”

Limiting the Negative Effects of AI

It should be noted that many of the negative effects and harmful applications of AI in politics already existed and are merely exacerbated by AI. But if there are ways to limit the damage that AI can do to the world of politics, then for the sake of preserving and promoting democratic institutions and fair elections, these should be implemented.

Companies like YouTube have announced that they will require content made with generative AI to be self-labelled. While this is a step in the right direction, it does nothing to diminish the effects of those who are purposefully using generative AI for harm. In this case, AI tools which automatically detect content created by another AI would be extremely useful. Some of these tools exist already, and many providers in audio detection claim their tools are over 90% accurate in differentiating between real audio and AI-generated audio. However, not only is the technology not advanced enough to detect these fakes at a large scale, but it’s also not accurate enough to provide the clarity required.
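To see why a claimed accuracy above 90% may still fall short at scale, a rough base-rate calculation helps. The sketch below is illustrative only: the function name, the volume of one million clips, the 1% share of fakes, and the reading of "90% accurate" as 90% sensitivity and 90% specificity are all assumptions made for the example, not figures from any detection provider.

```python
def flagged_breakdown(total_clips, fake_share, sensitivity, specificity):
    """Estimate what a detector's 'fake' flags consist of.

    All inputs are hypothetical:
      total_clips  - number of audio clips screened
      fake_share   - fraction of clips that really are AI-generated
      sensitivity  - fraction of fake clips the detector flags
      specificity  - fraction of genuine clips the detector clears
    """
    fakes = total_clips * fake_share
    genuine = total_clips - fakes

    true_flags = fakes * sensitivity            # fakes correctly flagged
    false_flags = genuine * (1 - specificity)   # genuine clips wrongly flagged
    missed_fakes = fakes - true_flags           # fakes that slip through

    # Share of all flagged clips that are actually fake
    precision = true_flags / (true_flags + false_flags)
    return true_flags, false_flags, missed_fakes, precision


if __name__ == "__main__":
    # Assumed scenario: 1,000,000 clips, 1% fake, detector "90% accurate"
    true_flags, false_flags, missed, precision = flagged_breakdown(
        total_clips=1_000_000,
        fake_share=0.01,
        sensitivity=0.90,
        specificity=0.90,
    )
    print(f"Fakes flagged:         {true_flags:,.0f}")
    print(f"Genuine clips flagged: {false_flags:,.0f}")
    print(f"Fakes missed:          {missed:,.0f}")
    print(f"Share of flags that are real fakes: {precision:.1%}")
```

Under these assumed numbers, genuine clips wrongly flagged outnumber correctly flagged fakes by about eleven to one, so only around 8% of flags point to an actual fake. Different assumptions would shift the figures, but the pattern holds whenever fakes are a small share of all content.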

It is also important to note that fake content detection is only useful if you have active moderators prepared to remove this information from a site. While some social media sites are claiming they will ramp up their content moderation teams ahead of elections, Joycelyn Tate insists that these moderation systems must always remain ‘ramped up’. The dissemination of fake information does not simply start when the election season starts. There is a strategy to how disinformation is spread, and it is usually a lengthy process that involves joining groups and becoming a trusted long-term voice.

Therefore, we need not only technical advancement and investment in deepfake detection and in tools which use AI for good in politics, but also legislation that requires these giant tech companies to invest more heavily in content moderation tools and to promote the truth above all.

Written by Celene Sandiford, smartR AI

p.s.

Fun fact: Bing’s image generator platform refused to create a deepfake cartoon of Trump and Kamala holding hands.